Test Implementation

4.1 Test Environment

Most (10) testers used their own proprietary testing platform to execute the tests. Installing these within the deployer’s network was difficult within the short timeframe of the pilot. However, this option was still feasible if

- The GenAI application allowed external access via API, and/or

- Relevant input/ output/ trace data could be shared externally by deployer with appropriate anonymising/ safeguards

In the remaining instances, a mix of bespoke testing scripts and tester’s existing testing libraries were used. In at least two cases, the tester was onboarded by the deployer into a staging environment for the testing exercise.

4.2 Test data and effort

Given the time spent upfront to define “what to test” and “how to test”, limited time was available for actual test execution. As a result, test sizes were relatively small. Most testers used a few hundred test cases, though two (PRISM Eval and Verify AI) went into tens or even hundreds of thousands.

The effort needed from the deployer and tester teams varied. Many required a total of 50-100 hours’ worth of effort over 2-4 weeks, though a few required hundreds. Infrastructure and LLM costs were inconsistently shared, but do not appear to have been significant in the context of this limited pilot period.

4.3 Implementation challenges

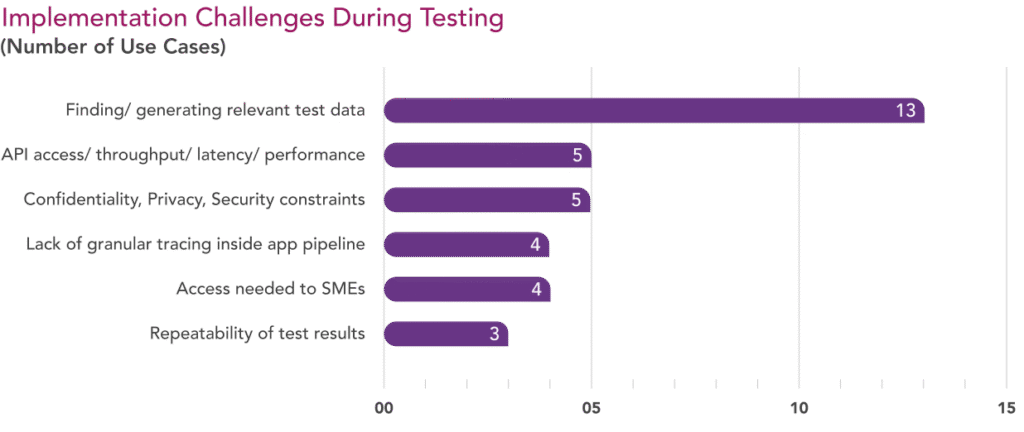

The difficulty of finding, or generating, test data that is realistic, able to cover edge cases and anticipate adversarial attacks, was seen as a common challenge by most pilot participants.

Beyond that, testers also found the following aspects challenging:

- Confidentiality, Security and Privacy constraints: impacted access to relevant test data, system prompts and even the actual application.

- API Access, Throughput, Latency and Performance.

- Lack of granular tracing access inside the application pipeline: resulting in limited ability to test and debug at interim points.

- High demand for access to human subject matter experts: e.g., to annotate “ground truth” or calibrate results of automated testing.

- Lack of consistency: Differences in response from the same application, to the same input, making it difficult to create consistent test results.

Some testers were also concerned about not sharing too much publicly on their proprietary testing approach (particularly for adversarial benchmarking and red-teaming efforts) or on the internal architecture of the applications being tested. These concerns have been incorporated when drafting the report.